Maryam Shanechi, Dean’s Professor of Electrical and Computer Engineering and Founding Director of the USC Center for Neurotechnology, and her Ph.D. students have developed a deep-learning method that decodes brain signals in real time, an advance that could significantly improve neurotechnologies. The work has been published in Nature Biomedical Engineering.

Shanechi’s work involves decoding brain signals to develop brain-computer interfaces (BCIs) for the treatment of neurological and mental health conditions. For example, a BCI may move a robotic arm in real time for a paralyzed patient by decoding, from their brain signals, the movement that patient is thinking about.

Alternatively, a BCI may decode mood symptoms in a patient with major depression from their brain signals in order to deliver the right dose of deep-brain stimulation therapy at each point in time.

Deep learning, a branch of artificial intelligence (AI), has the potential to substantially improve how accurately brain signals are decoded. Until now, however, BCIs have largely relied on simpler algorithms. For deep-learning methods to become seamlessly applicable to real-time BCIs, they must overcome several additional challenges, Shanechi said.

“First, we need to develop deep-learning methods that not only are accurate, but also can decode in real time, and efficiently,” Shanechi said. “For example, decode a patient’s planned movement from their brain signals in real time, as they are thinking about grabbing a cup of coffee. Second, we need these methods to handle randomly missing brain signals, which can occur when transmitting signals in wireless BCIs.”

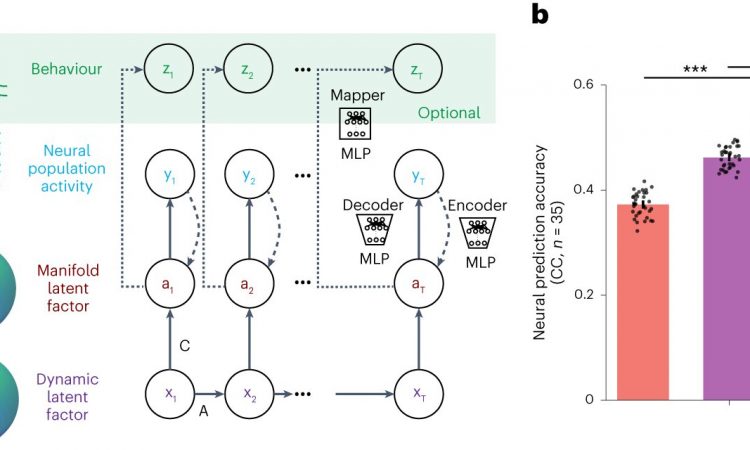

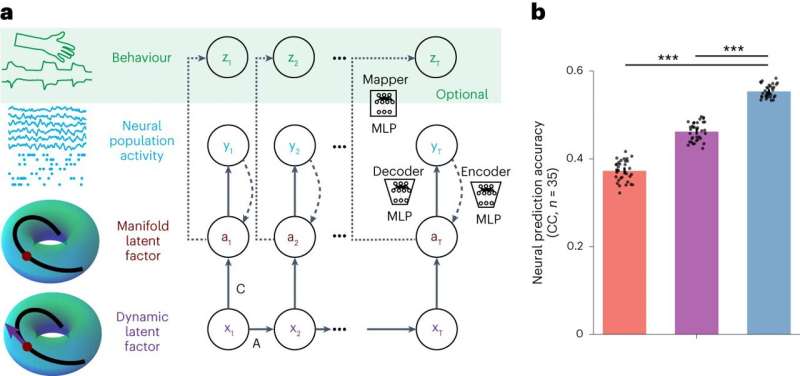

Shanechi and her students, Hamidreza Abbaspourazad and Eray Erturk, developed a new deep-learning approach for brain signals that addresses both of these challenges. They call the method DFINE, for “dynamical flexible inference for nonlinear embeddings.” They show that DFINE decodes brain signals accurately while running in real time, and that it continues to do so even when brain signals are missing at random times, as can happen in wireless BCIs.
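The article does not describe DFINE’s internals, so the following is not its implementation. It is a minimal sketch, under stated assumptions, of one standard way a decoder can both run in real time and tolerate randomly missing samples: a recursive (causal) filter over a linear-Gaussian latent state model, here a Kalman filter. It updates its estimate once per incoming sample and simply skips the correction step when a sample is lost. The function name `kalman_filter_with_gaps`, the NaN convention for missing samples, and all simulated parameters (a 2-D rotating latent state observed through 5 channels, about 30% of samples dropped) are hypothetical choices for illustration, and the nonlinear modeling that DFINE adds is omitted entirely.

```python
import numpy as np

def kalman_filter_with_gaps(ys, A, C, Q, R, x0, P0):
    """Hypothetical causal Kalman filter that tolerates missing samples.

    ys: array of shape (T, n_obs); a row containing NaN is treated as a lost sample.
    Returns filtered latent-state means of shape (T, n_latent).
    """
    x, P = x0.copy(), P0.copy()
    means = []
    for y in ys:
        # Predict: propagate the latent state one step with the linear dynamics.
        x = A @ x
        P = A @ P @ A.T + Q
        if not np.any(np.isnan(y)):
            # Update: correct the prediction only if this sample actually arrived.
            S = C @ P @ C.T + R
            K = P @ C.T @ np.linalg.inv(S)
            x = x + K @ (y - C @ x)
            P = (np.eye(len(x)) - K @ C) @ P
        means.append(x.copy())
    return np.array(means)

# Simulated example (assumed parameters, not real neural data).
rng = np.random.default_rng(0)
theta = 0.1
A = 0.99 * np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta), np.cos(theta)]])
C = rng.standard_normal((5, 2))  # 2 latent factors observed through 5 channels
Q = 0.01 * np.eye(2)             # latent process noise
R = 0.1 * np.eye(5)              # observation noise
T = 200

x = np.array([1.0, 0.0])
xs, ys = [], []
for _ in range(T):
    x = A @ x + rng.multivariate_normal(np.zeros(2), Q)
    xs.append(x)
    ys.append(C @ x + rng.multivariate_normal(np.zeros(5), R))
xs, ys = np.array(xs), np.array(ys)

# Drop roughly 30% of time steps at random, as a lossy wireless link might.
mask = rng.random(T) < 0.3
ys[mask] = np.nan

est = kalman_filter_with_gaps(ys, A, C, Q, R, np.zeros(2), np.eye(2))
```

Because each step uses only past and present samples and costs a fixed amount of computation, a filter of this kind can produce an estimate as each sample arrives; when a sample is missing, the prediction from the dynamics stands in for the skipped update.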

Prior to this work, there was a trade-off in models of brain data: “Either the models had better accuracy, but it was difficult for them to decode in real time and/or work robustly with some missing brain data. Or the models could decode in real time but not as accurately,” Abbaspourazad said.

“But BCIs need both accuracy and real-time/robust decoding. This will allow them to help patients with various brain disorders,” Erturk said.

“With this new deep-learning model, we are getting both,” Shanechi added. “We are getting accuracy through the deep learning that captures the complexity of brain signals. But we are building an approach that can do so while also running in real time and robustly.”

“This work provides advanced deep-learning methods that can be used in real-world neurotechnologies because they simultaneously offer accuracy, real-time operation, flexibility, and efficiency,” Shanechi said. The implication, she said, is that future BCIs could be faster, more precise, and more responsive, which could significantly enhance therapeutic devices for people with neurological or even mental health conditions.

The study’s authors include Hamidreza Abbaspourazad, Eray Erturk, Bijan Pesaran, and Maryam M. Shanechi.

More information:

Hamidreza Abbaspourazad et al., Dynamical flexible inference of nonlinear latent factors and structures in neural population activity, Nature Biomedical Engineering (2023). DOI: 10.1038/s41551-023-01106-1

A neural network that enables flexible nonlinear inference from neural population activity, Nature Biomedical Engineering (2023). DOI: 10.1038/s41551-023-01111-4
